From the depth images captured by IR camera, we can derive point clouds which show the 3D shape of the target. An example of point clouds is shown in figure 1. An registration issue arises when we have 2 point clouds of same scene captured by different camera from different point of view. The overall goal is to find a transformation which can bring one point clouds as close to another clouds as possible. The most common method is called Iterative Closest Points (ICP) and various variants of it have been well developed.

| Figure 1 Point clouds produced from Kinect depth data |

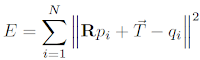

We will call the first set of points model points, and the other set data points. The model point set are denoted Q = {q1, q2, q3,...,qn} and data point set P = {p1, p2, p3,...,pn}. We can set an objective function (error metric) E to evaluate the distance between two set of points. A popular metric is sum of squared errors:

which is squared distance from points in one cloud to their nearest neighbours in the other after the transformation τ.

There are various kind of transformation we can apply including rigid or non-rigid depends on the task. Here we use only rotation (R) and translation (T) only since the scene (patient) doesn't deform much and it keeps the calculation realistic and simple. Therefore, the objective function can be rewritten as:

Please keep in mind that the correspondence of model and data points are unknown. To this end, the ICP try to approximate the correspondence based on the nearest neighbour before transformation τ. The ICP performs following steps iteratively:

- Matching: for every data point the nearest neighbour in the model point set is found.

- Minimization: the objective function is minimised and the transformation parameters are estimated.

- Transform the data points using the estimated transformation.

- Iterate (re-matching the new data point with model point).

The algorithm is terminated based on the predefined number of iterations or relative change of objective function.

Reference:

Hans, Kjer and Jakob Wilm, 2010. Evaluation of surface registration algorithms for PET motion correction. Bachelor theis, Technical University of Denmark.

Reference:

Hans, Kjer and Jakob Wilm, 2010. Evaluation of surface registration algorithms for PET motion correction. Bachelor theis, Technical University of Denmark.

I believe that this step probably wouldn't be necessary if the two Kinect sensors are calibrated wrt to each other. Any points at one frame-of-reference can be automaticaly transformed to the other frame-of-reference by using the matrix representing the relative pose of the two frames. Not sure if this is what you've had in mind. George

ReplyDeleteI was thinking that after transformation finish, the two point clouds may not overlay well, then the ICP can be used to fine register the points.

DeleteI will focus on calibration now and see if the ICP is necessary when I can get the transformation result.

BTW, when can I get the Kinects:)